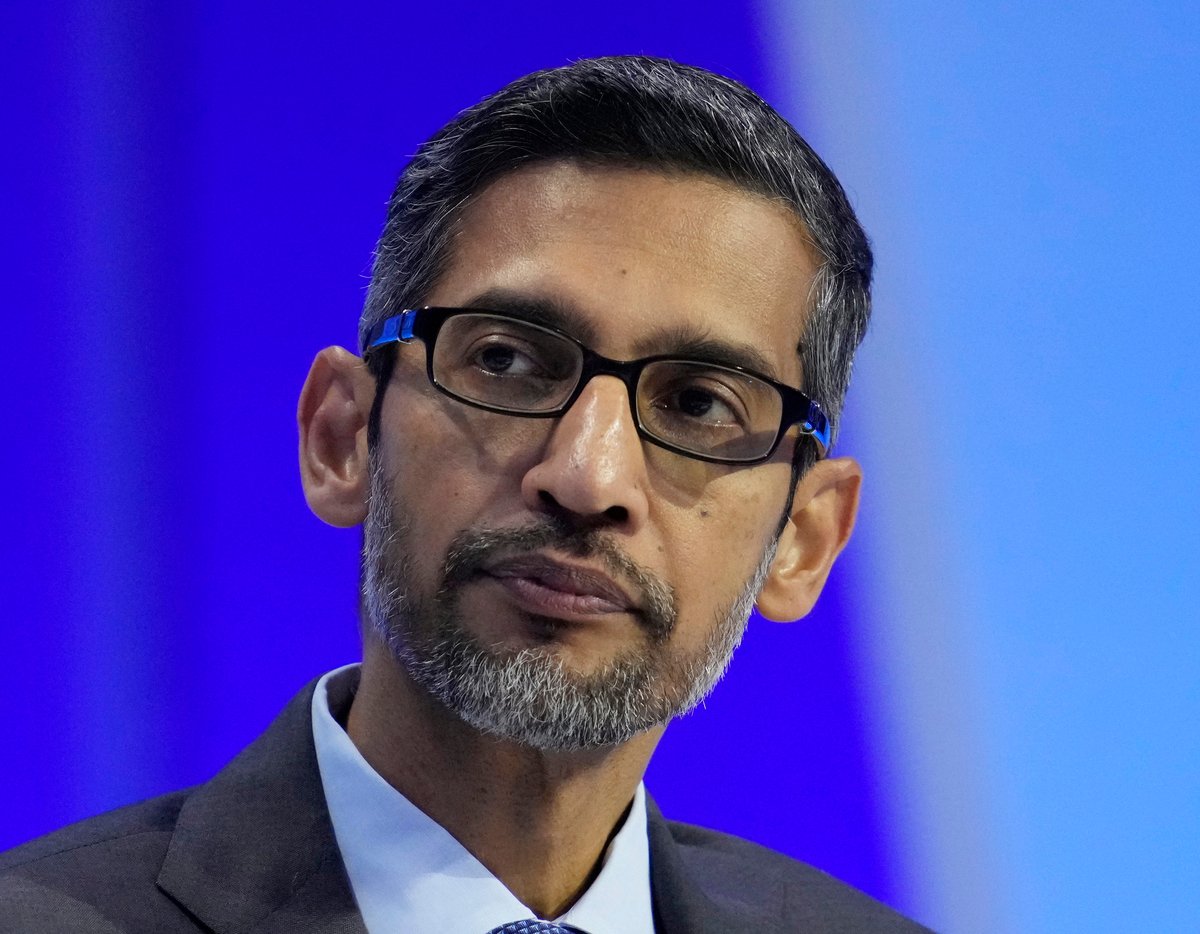

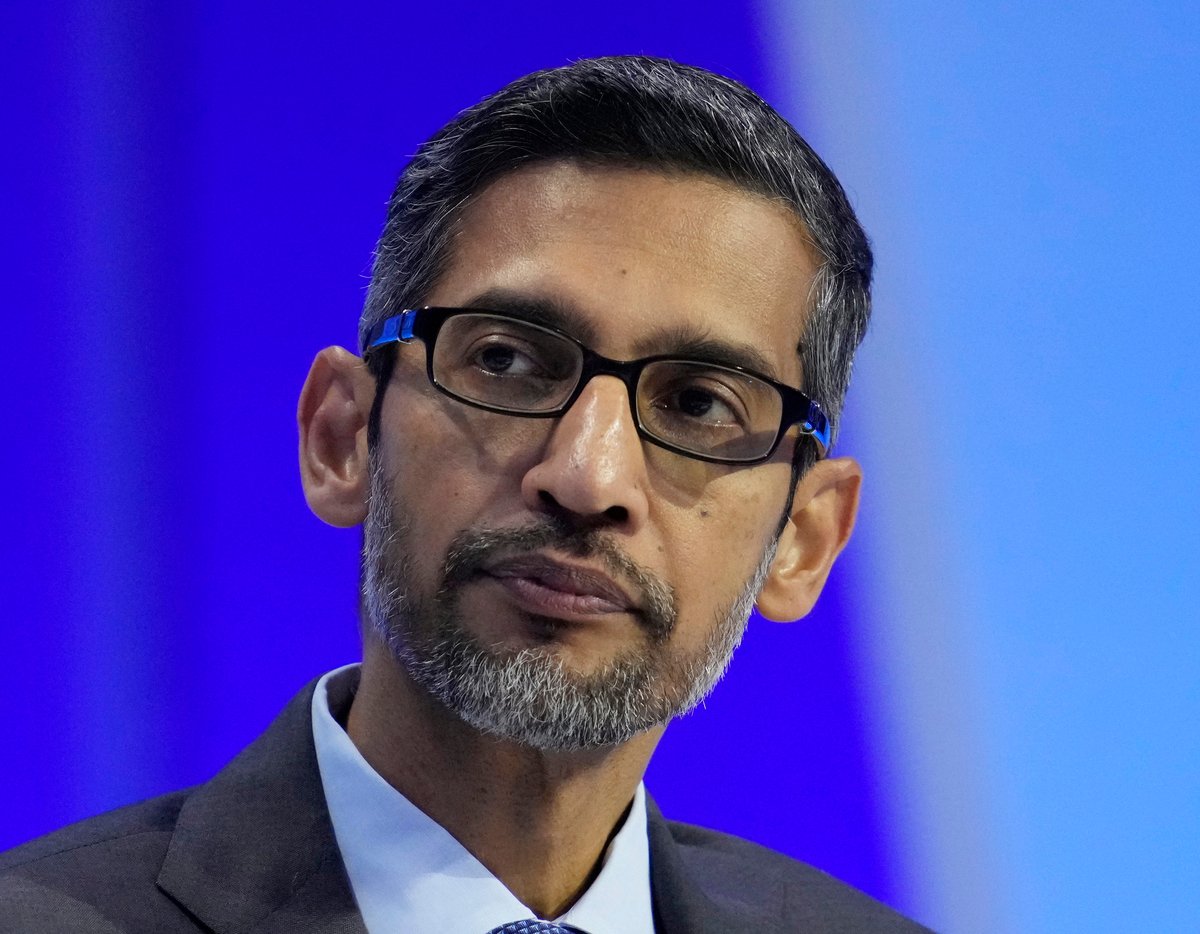

Google Chief Executive Officer Sundar Pichai sent an email to staff on Tuesday saying Gemini’s launch was unacceptable. Eric Risberg/AP

Google CEO Sundar Pichai told employees in an internal memo late Tuesday that the company’s launch of the artificial intelligence tool Gemini has been unacceptable, pledging to fix and relaunch the service in the coming weeks.

Last week, Google paused Gemini’s ability to create images following viral posts on social media depicting some of the AI tool’s results, including images of America’s Founding Fathers as black, the Pope as a woman and a Nazi-era German soldier with dark skin.

The tool often thwarted requests for images of white people, prompting a backlash online among conservative commentators and others, who accused Google of anti-white bias.

Still reeling from the controversy, Pichai told Google staff in a note reviewed by NPR that there is no excuse for the tool’s “problematic” performance.

“I know that some of its responses have offended our users and shown bias – to be clear, that’s completely unacceptable and we got it wrong,” Pichai wrote. “We’ll be driving a clear set of actions, including structural changes, updated product guidelines, improved launch processes, robust evals and red-teaming, and technical recommendations.”

Google executive pins blame on ‘fine-tuning’ error

In a blog post published Friday, Google explained that when it built Gemini’s image generator, it was fine-tuned to try to avoid the pitfalls of previous ones that created violent or sexually explicit images of real people.

As part of that process, creating diverse images was a focus, or as Google put it, building an image tool that would “work well for everyone” around the world.

“If you ask for a picture of soccer players, or someone walking a dog, you may want to receive a range of people. You probably don’t just want to only receive images of people of just one type of ethnicity (or any other characteristic),” wrote Google executive Prabhakar Raghavan.

But, as Raghavan wrote, the effort backfired. The AI service “failed to account for cases that should clearly not show a range. And second, over time, the model became way more cautious than we intended and refused to answer certain prompts entirely – wrongly interpreting some very anodyne prompts as sensitive.”

Researchers have pointed out that Google was trying to counter images that perpetuate bias and stereotypes, since many large image datasets have been found to contain mostly white people, or to be replete with one type of image, such as depicting most doctors as male.

In trying to avoid a public relations crisis about gender and race, Google managed to run headlong into another controversy over accuracy and history.

Text responses also prompt controversy

Gemini, which was previously named Bard, is also an AI chatbot, similar to OpenAI’s hit service ChatGPT.

The text-generating capabilities of Gemini also came under scrutiny after several outlandish responses went viral online.

Elon Musk shared a screenshot of a question one user asked: “Who has done more harm: libertarians or Stalin?”

Gemini responded: “It is difficult to say definitively which ideology has done more harm, both have had negative consequences.”

The answer appears to have been fixed. Now, when the Stalin question is posed to the chatbot, it replies: “Stalin was directly responsible for the deaths of millions of people through orchestrated famines, executions, and the Gulag labor camp system.”

Google’s Pichai: “No AI is perfect”

Gemini, like ChatGPT, is known as a large language model. It is a type of AI technology that predicts the next word or sequence of words based on an enormous dataset compiled from the internet. But what Gemini, and early versions of ChatGPT, have illustrated is that the tools can produce unforeseeable and sometimes unsettling results that even the engineers working on the advanced technology cannot always predict ahead of a tool’s public release.

Big Tech companies, including Google, have for years been studying AI image generators and large language models secretly in labs. But OpenAI’s unveiling of ChatGPT in late 2022 set off an AI arms race in Silicon Valley, with all the major tech companies trying to release their own versions to stay competitive.

In his note to employees at Google, Pichai wrote that when Gemini is re-released to the public, he hopes the service is in better shape.

“No AI is perfect, especially at this emerging stage of the industry’s development, but we know the bar is high for us and we will keep at it for however long it takes,” Pichai wrote.